Training Deep Neural Networks on a GPU

Importing Libraries

import torch

import torchvision

import numpy as np

import matplotlib

import matplotlib.pyplot as plt

import torch.nn as nn

import torch.nn.functional as F

from torchvision.datasets import MNIST

from torchvision.transforms import ToTensor

from torchvision.utils import make_grid

from torch.utils.data.dataloader import DataLoader

from torch.utils.data import random_split

%matplotlib inline

matplotlib.rcParams['figure.facecolor'] = '#ffffff'

dataset = MNIST(root='data/', download=False, transform=ToTensor())

image, label = dataset[0]

image.permute(1, 2, 0).shape

image, label = dataset[0]

print('image.shape:', image.shape)

plt.imshow(image.permute(1, 2, 0), cmap='gray') # plt.imshow expects channels to be last dimension in an image tensor, so we use permute to reorder

print('label:', label)

len(dataset)

dataset[0]

image, label = dataset[143]

print('image.shape:', image.shape)

plt.imshow(image.permute(1, 2, 0), cmap='gray') # plt.imshow expects channels to be last dimension in an image tensor, so we use permute to reorder

print('label:', label)

val_size = 10000

train_size = len(dataset) - val_size

train_ds, val_ds = random_split(dataset, [train_size, val_size])

len(train_ds), len(val_ds)

batch_size = 128

train_loader = DataLoader(train_ds, batch_size, shuffle=True, num_workers=4, pin_memory=True)

val_loader = DataLoader(val_ds, batch_size*2, num_workers=4, pin_memory=True)

num_workers attribute tells the data loader instance how many sub-processes to use for data loading. By default, the num_workers value is set to zero, and a value of zero tells the loader to load the data inside the main process.

pin_memory (bool, optional) – If True, the data loader will copy tensors into CUDA pinned memory before returning them.

for images, _ in train_loader:

print('images.shape', images.shape)

print('grid.shape', make_grid(images, nrow=16).shape)

break

for images, _ in train_loader:

print('image.shape:', image.shape)

plt.figure(figsize=(16,8))

plt.axis('off')

plt.imshow(make_grid(images, nrow=16).permute((1, 2, 0)))

break

Hidden Layers, Activation function and Non-Linearity

for images, labels in train_loader:

print('images.shape', images.shape)

inputs = images.reshape(-1, 784)

print('inputs.shape', inputs.shape)

break

input_size = inputs.shape[-1]

#size of output from hidden layer is 32, can be inc or dec to change the learning capacity of model

hidden_size = 32

layer1 = nn.Linear(input_size, hidden_size ) # it will convert 784 to 32

inputs.shape

layer1_outputs = layer1(inputs)

print('layer1_outputs', layer1_outputs.shape)

layer1_outputs_direct = inputs @ layer1.weight.t() + layer1.bias

layer1_outputs_direct.shape

torch.allclose(layer1_outputs, layer1_outputs_direct, 1e-3)

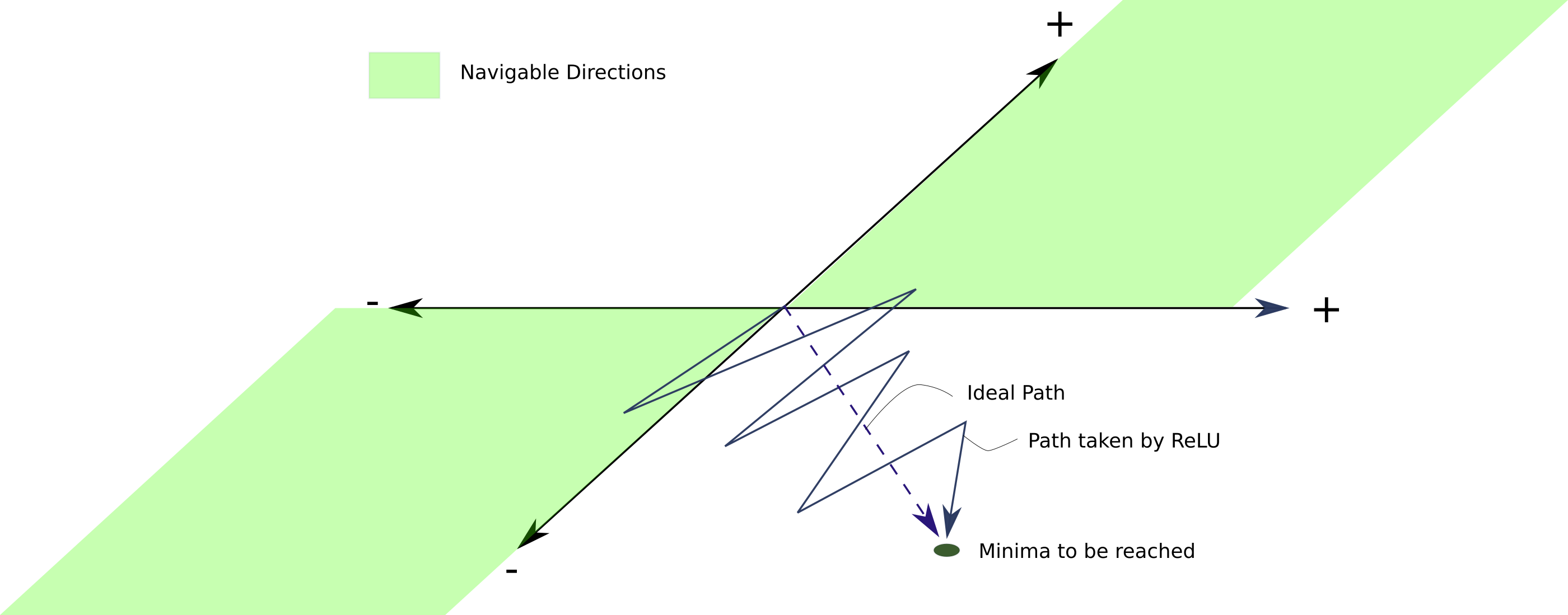

Thus, layer1_outputs and inputs have a linear relationship, i.e., each element of layer_outputs is a weighted sum of elements from inputs. Thus, even as we train the model and modify the weights, layer1 can only capture linear relationships between inputs and outputs.

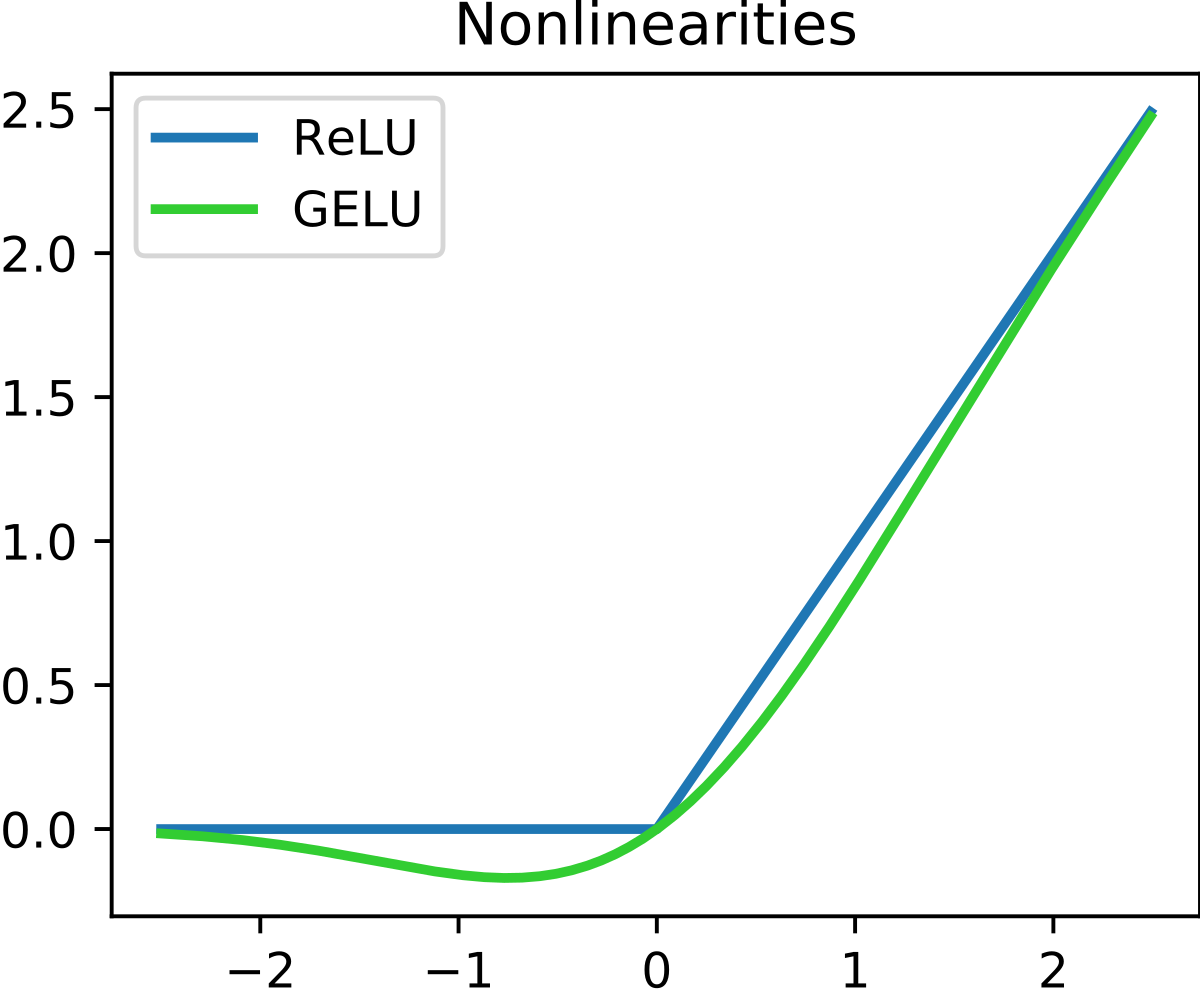

Next, we'll use the Rectified Linear Unit (ReLU) function as the activation function for the outputs. It has the formula relu(x) = max(0,x) i.e. it simply replaces negative values in a given tensor with the value 0. ReLU is a non-linear function

We can use the F.relu method to apply ReLU to the elements of a tensor.

F.relu(torch.tensor([[1, -1, 0],

[-0.1, .2, 3]]))

layer1_outputs.shape

relu_outputs = F.relu(layer1_outputs)

print('relu_outputs.shape:', relu_outputs.shape)

print('min(layer1_outputs):', torch.min(layer1_outputs).item())

print('min(relu_outputs):', torch.min(relu_outputs).item())

output_size = 10

layer2 = nn.Linear(hidden_size, output_size)

layer2_outputs = layer2(relu_outputs)

print('relu_outputs.shape:', relu_outputs.shape)

print('layer2_outputs.shape:', layer2_outputs.shape)

inputs.shape

F.cross_entropy(layer2_outputs, labels)

outputs = (F.relu(inputs @ layer1.weight.t() +layer1.bias)) @ layer2.weight.t() + layer2.bias

torch.allclose(outputs, layer2_outputs, 1e-3)

if we hadn't included a non-linear activation between the two linear layers, the final relationship b/w inputs and outputs would be Linear

outputs2 = (inputs @ layer1.weight.t() + layer1.bias) @ layer2.weight.t() + layer2.bias

combined_layer = nn.Linear(input_size, output_size)

combined_layer.weight.data = layer2.weight @ layer1.weight

combined_layer.bias.data = layer1.bias @ layer2.weight.t() + layer2.bias

outputs3 = inputs @ combined_layer.weight.t() + combined_layer.bias

torch.allclose(outputs2, outputs3, 1e-3)

Model

We are now ready to define our model. As discussed above, we'll create a neural network with one hidden layer. Here's what that means:

Instead of using a single

nn.Linearobject to transform a batch of inputs (pixel intensities) into outputs (class probabilities), we'll use twonn.Linearobjects. Each of these is called a layer in the network.The first layer (also known as the hidden layer) will transform the input matrix of shape

batch_size x 784into an intermediate output matrix of shapebatch_size x hidden_size. The parameterhidden_sizecan be configured manually (e.g., 32 or 64).We'll then apply a non-linear activation function to the intermediate outputs. The activation function transforms individual elements of the matrix.

The result of the activation function, which is also of size

batch_size x hidden_size, is passed into the second layer (also known as the output layer). The second layer transforms it into a matrix of sizebatch_size x 10. We can use this output to compute the loss and adjust weights using gradient descent.

As discussed above, our model will contain one hidden layer. Here's what it looks like visually:

Let's define the model by extending the nn.Module class from PyTorch.

class MnistModel(nn.Module):

def __init__(self, in_size, hidden_size, out_size):

super().__init__()

self.Linear1 = nn.Linear(in_size, hidden_size) # hidden layer

self.Linear2 = nn.Linear(hidden_size, out_size) # output layer

def forward(self, xb):

xb = xb.view(xb.size(0),-1) # flatten image tensor

out = self.Linear1(xb) # intermediate outputs using hidden layer

out = F.relu(out) # applying activation function

out = self.Linear2(out) # predictions using o/p layer

return out

def training_step(self, batch):

images, labels = batch

out = self(images)

loss = F.cross_entropy(out, labels)

return loss

def validation_step(self, batch):

images, labels = batch

out = self(images)

loss = F.cross_entropy(out, labels)

acc = accuracy(out, labels)

return {'val_loss': loss, 'val_acc': acc}

def validation_epoch_end(self, outputs):

batch_loss = [x['val_loss'] for x in outputs]

epoch_loss = torch.stack(batch_loss).mean() # Combine losses

batch_accs = [x['val_acc'] for x in outputs]

epoch_acc = torch.stack(batch_accs).mean() # combine accuracies

return {'val_loss': epoch_loss.item(), 'val_acc': epoch_acc.item()}

def epoch_end(self, epoch, result):

print("Epoch [{}], val_loss: {:4f}, val_acc: {:4f}".format(epoch, result['val_loss'], result['val_acc']))

def accuracy(outputs, labels):

_, preds = torch.max(outputs, dim=1)

return torch.tensor(torch.sum(preds == labels).item() / len(preds))

input_size = 784

hidden_size = 32

num_classes = 10

model = MnistModel(input_size, hidden_size, out_size = num_classes)

for t in model.parameters(): # weights and bias for linear layer and hidden layer

print(t.shape)

for images, labels in train_loader:

outputs = model(images)

break

loss = F.cross_entropy(outputs, labels)

print('Loss:', loss.item())

print('outputs.shape: ', outputs.shape)

print('Sample outputs :', outputs[:2].data)

torch.cuda.is_available()

def get_default_device():

if torch.cuda.is_available():

return torch.device('cuda')

else:

return torch.device('cpu')

device = get_default_device()

device

def to_device(data, device):

"""MOve tensors to chosen device"""

if isinstance(data, (list,tuple)):

return [to_device(x, device) for x in data]

return data.to(device, non_blocking = True) # to method

for images, labels in train_loader:

print(images.shape)

print(images.device)

images = to_device(images, device)

print(images.device)

break

DeviceDataLeader class to wrap our existing data loaders and move batches of data to the selected device, iter method to retrieve batches of data and an len to get number of batches

class DeviceDataLoader():

# wrap a dataloader to move data to device

def __init__(self, dl , device):

self.dl = dl

self.device = device

# yield a batch of data after moving it to device

def __iter__(self):

for b in self.dl:

yield to_device(b, self.device)

# number of batches

def __len__(self):

return len(self.dl)

# example

def some_numbers():

yield 10

yield 20

yield 30

for value in some_numbers():

print(value)

train_loader = DeviceDataLoader(train_loader, device)

val_loader = DeviceDataLoader(val_loader, device)

for xb, yb in val_loader:

print('xb.device:', xb.device)

print('yb:', yb)

break

Training part

def evaluate(model, val_loader):

outputs = [model.validation_step(batch) for batch in val_loader]

return model.validation_epoch_end(outputs)

def fit(epochs, lr, model, train_loader, val_loader, opt_func = torch.optim.SGD):

history = []

optimizer = opt_func(model.parameters(), lr)

for epoch in range(epochs):

#training phase

for batch in train_loader:

loss = model.training_step(batch)

loss.backward()

optimizer.step()

optimizer.zero_grad()

#validation phase

result = evaluate(model, val_loader)

model.epoch_end(epoch, result)

history.append(result)

return history

model = MnistModel(input_size, hidden_size= hidden_size, out_size=num_classes)

to_device(model, device)

history = [evaluate(model, val_loader)]

history

history += fit(5, 0.5, model, train_loader, val_loader)

try with more less lr

history += fit(5, 0.1, model, train_loader, val_loader)

losses = [x['val_loss'] for x in history]

plt.plot(losses, '-x')

plt.xlabel('epoch')

plt.ylabel('loss')

plt.title('Loss v/s Epochs')

accuracies = [x['val_acc'] for x in history]

plt.plot(accuracies, '-x')

plt.xlabel('epochs')

plt.ylabel('accuracies')

plt.title('Accuracies v/s Epochs')

test_dataset = MNIST(root='data/',

train=False,

transform=ToTensor())

def predict_image(img, model):

xb = to_device(img.unsqueeze(0), device)

print(xb.device)

yb = model(xb)

_, preds = torch.max(yb, dim=1)

return preds[0].item()

img, label = test_dataset[0]

plt.imshow(img[0], cmap='gray')

print('Label:', label, 'Prediction:', predict_image(img, model))

img, label = test_dataset[123]

plt.imshow(img[0], cmap='gray')

print('Label:', label, 'Prediction:', predict_image(img, model))

img, label = test_dataset[183]

plt.imshow(img[0], cmap='gray')

print('Label:', label, 'Prediction:', predict_image(img, model))

test_loader = DeviceDataLoader(DataLoader(test_dataset, batch_size=256), device)

result = evaluate(model, test_loader)

result

torch.save(model.state_dict(), 'mnist-feedforward.pth')